Is it real or is it ChatGPT? (and does it matter?)

Over the past several months, every day seems to bring news of another leap forward in artificial intelligence (AI). Many stories are about ChatGPT, the chatbot developed by OpenAI, which was released at the end of November 2022. Since then, journalists have given it a workout to see if it will make them “a healthier, happier, more productive person”; New Yorker writers have looked into how it works; editors have asked it to identify and correct grammatical errors or edit short pieces of text; science fiction magazine Clarkesworld refused to accept new submissions when hundreds of writers who couldn’t be bothered to write deluged the editor with AI-generated fiction; and teachers have either announced the end of the academic essay or invited chatbots into their schools.

And, of course, there have been numerous stories about the ethics of AI, illustrated by recent controversies like the song featuring AI-generated vocals by Drake and the Weeknd that pushed the bounds of copyright, the “interview” in Die Aktuelle with former racing car driver Michael Schumacher that led to the firing of the magazine’s editor and talk of legal action by Schumacher’s family, and several art contests that have been won by AI-generated paintings or photographs, including one where the winning photographer declined the award, saying he had submitted his work because he hoped to encourage a discussion about what is photography and what is not.

This all seemed rather abstract to me, till I was talking to a publisher friend who told me the press’s marketing department had been fooling around with ChatGPT, asking it to write catalogue copy for upcoming books. This is often an onerous job, given to the most junior employees, because you have to read the book you are blurbing, which may not yet be edited, and then write something enthusiastic about it. Apparently the ChatGPT writeups were so good that they used some of them in the publisher’s catalogue.

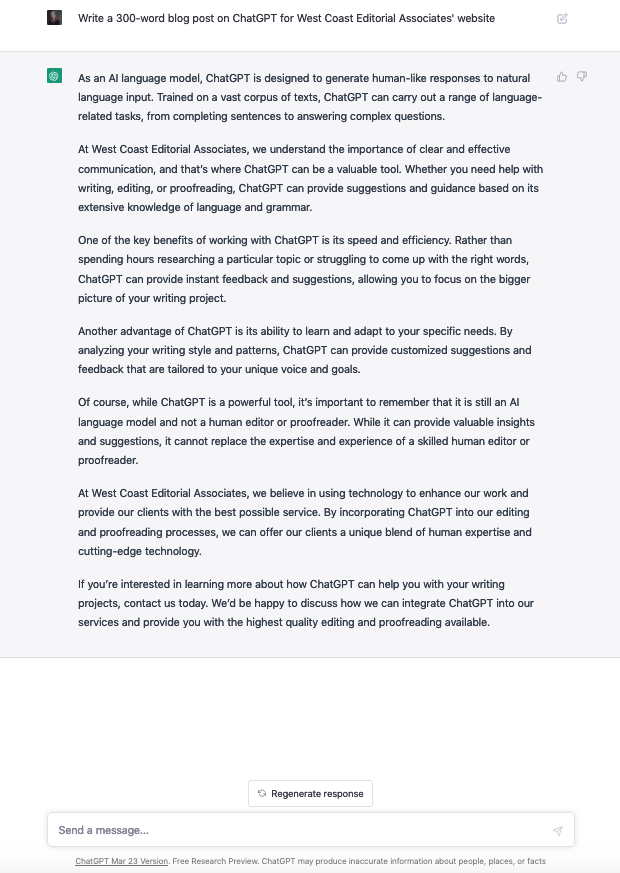

This intrigued me, and I wondered about using ChatGPT to write my May post for the WCEA blog. I signed in at openai.com and entered the instruction “Write a 300-word blog post on ChatGPT for West Coast Editorial Associates’ website.”

Not bad, though it’s more of a promotional piece, advertising WCEA’s ChatGPT services (Ed. What ChatGPT services?), than it is about ChatGPT. I could probably get it to write something closer to what I was aiming for if I fine-tuned my instructions. But at what point in the fine-tuning does it become easier to just write the piece myself?

Related to this, I found it interesting that ChatGPT suggests spending hours on research and “struggling to come up with the right words” is a waste of time. What is the “bigger picture of your writing project” if not doing the research and figuring out what you want to say about your subject and how you want to say it?

Speaking of research, the fine print disclaimer at the bottom of the chat screen—“ChatGPT may produce inaccurate information about people, places, or facts”—should not be overlooked. This was highlighted in a presentation by Samantha Enslen and Corinne Jorgenson of Dragonfly Editorial, offered by ACES in March 2023 (available for US$30). Besides pointing out egregious examples of plagiarism in text that ChatGPT generated, Enslen noted that “ChatGPT also tended to ‘make up’ content of its own.” It referred to ACES as the “American Society of Copy Editors,” when the name is actually an acronym for “The American Copy Editors Society” (now the Society for Editing). When asked to write a short biography of Enslen, ChatGPT attributed two books to her that she hadn’t written and gave her a master’s degree in professional writing (“I just got a whole new degree gifted to me!” she exclaimed).

This tendency of ChatGPT to make things up has been noted elsewhere. Chris Moran at the Guardian wrote that the newspaper had been contacted by researchers trying to track down articles that appeared to have been removed from the paper’s website. Turns out the researchers had been using ChatGPT, and it had decided to include a few articles that didn’t exist. In her ACES presentation, Samantha Enslen quoted a writer who said, “I used ChatGPT to write an entire white paper in 90 minutes. The research, fact-checking, and design took another day and a half.”

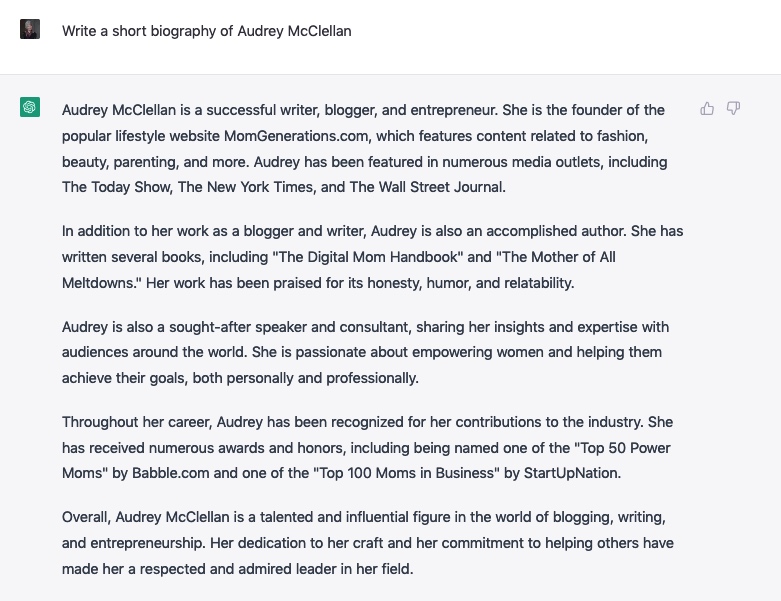

I was intrigued, and asked ChatGPT to write a short biography of me.

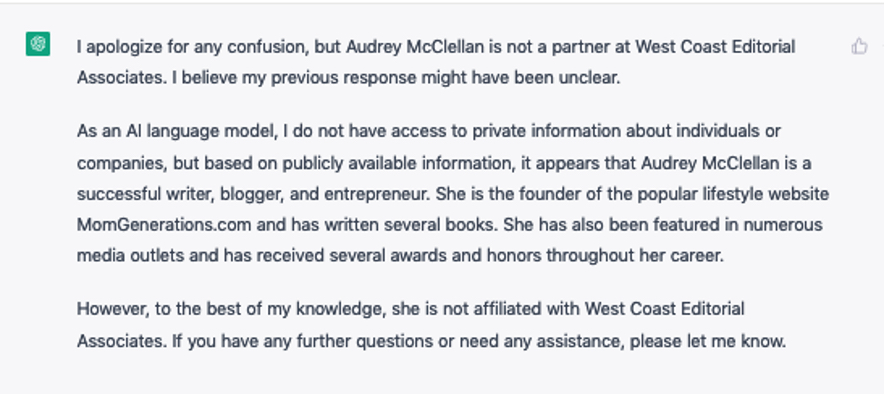

Well, that’s not me. I do know there’s another Audrey McClellan who blogs about parenting, but I can’t vouch for the accuracy of this bio. So I told ChatGPT, “I should have specified, please write a short biography of Audrey McClellan, partner in West Coast Editorial Associates.”

Whoa! That’s a surprise. Maybe it could write about one of my partners instead.

I ran this by Rowena, and though the first and last paragraphs were mostly okay (WCEA is based in Greater Vancouver and Greater Victoria, not just Vancouver), the rest was mostly wrong. Rowena does not have a background in journalism; does not work with many clients in the non-profit and charitable sectors; has never written for the Globe and Mail, Maclean’s, and Reader’s Digest or worked with the Rick Hansen Foundation or Vancouver Foundation; and is not a regular speaker anywhere. She is the co-author of four books and the author of seven . . . but not the two mentioned here!

You’ll notice some repetition in the biography created for another partner, Ruth Wilson, with many of the same inaccuracies: no background in journalism, not the co-author of those books (though she is a fan of the Lane Winslow Mystery series), not a specialist in or speaker on arts and culture, hasn’t worked for those clients.

My little dive into ChatGPT makes me think that editors will continue to have lots of work for at least the next few years, especially if schools allow students to use chatbots rather than teaching them how to write. But I also think we will be talking a lot about what is art, and what is truth, for the foreseeable future.